Leverage

Let's say your game spends 90% of its time drawing the 3-d world and 10% drawing the UI. Your leverage ratios are therefore 0.9 for 3-d and 0.1 for the UI. Those are the scaling factors that discount the value of your optimizations. So a 1% optimization to the rendering engine will give you a 0.9% speed boost overall, while a 1% optimization to the UI will give you only a 0.1% speed boost overall.

So my first answer is: 1% is not a lot unless you have a lot of leverage. If your leverage ratio is 0.05 you don't have a lot of leverage, and even a 20% optimization yields only about 1% overall, which isn't going to produce noticeable results.
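The arithmetic above fits in a few lines. This is only an illustrative sketch: the function name `overall_saving` is made up for the example, and it treats a "k% optimization" as removing k% of that section's own time.

```python
def overall_saving(leverage, local_saving):
    """Fraction of total time saved by an optimization.

    leverage     -- fraction of total time spent in the code being optimized
    local_saving -- fraction of that code's own time the optimization removes
    """
    return leverage * local_saving


# The 3-d renderer (leverage 0.9) vs. the UI (leverage 0.1), each improved by 1%.
print(f"3-d: {overall_saving(0.9, 0.01):.2%}")            # 0.90%
print(f"UI:  {overall_saving(0.1, 0.01):.2%}")            # 0.10%

# A 20% optimization with a leverage ratio of only 0.05.
print(f"low leverage: {overall_saving(0.05, 0.20):.2%}")  # 1.00%
```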

This is why using an adaptive sampling profiler like Shark is so important. A Shark time profile is your code sorted by leverage. Let's take a look at a real Shark profile to see this.

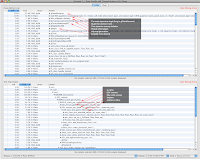

This is a Shark profile of X-Plane 9.62 on my Mac Pro in an external view with pretty much the default settings. I have used Shark's data mining features to collapse and clean the display. Basically time spent in the sub-parts of the OpenGL driver has been merged into libGL.dylib, and libSys is merged into whoever calls it. We might not do this for other types of optimizations, but here we just want to see "who is expensive" and whether it's us or the GL.

The profile is "Timed Profile (All Thread States)" for just the app. This captures time spent blocking. Since X-Plane's frame-rate (which is what we want to make fast) is limited by how long it takes to go around the loop including blocking, we have to look at blocking and focus on the main thread. If we were totally CPU bound (e.g. other worker threads were using more than total available CPU resources) we might simply look at CPU use for all non-blocking threads. But that's another profile.
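To see why blocked time matters, here is a small sketch (nothing to do with Shark itself) that measures the same piece of work two ways: wall-clock time, which is what the frame-rate depends on, and CPU time, which ignores blocking. The `measure` and `blocking_work` names are hypothetical, and `time.sleep` is only a stand-in for a call that blocks.

```python
import time


def measure(work):
    """Return (wall_seconds, cpu_seconds) spent running work()."""
    wall_start = time.perf_counter()   # wall clock, includes time spent blocked
    cpu_start = time.process_time()    # CPU time only, excludes time spent asleep
    work()
    return (time.perf_counter() - wall_start,
            time.process_time() - cpu_start)


def blocking_work():
    # Stand-in for a call that blocks, e.g. waiting on the driver.
    time.sleep(0.2)


wall, cpu = measure(blocking_work)
print(f"wall: {wall:.3f}s  cpu: {cpu:.3f}s")
```

A CPU-only measurement would report this work as nearly free even though the loop was stalled for the whole 0.2 seconds.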

What do we see? In the bottom-up view we can see our top offenders, and how much leverage we get if we can improve them. In order of maximum leverage:

glDrawElements! With 35.6% of thread time (that is, a leverage ratio of 0.356) the biggest single thing we could do to make X-Plane faster is to make glDrawElements execute faster. 0.356 is a huge number for an app that's already been beaten to within an inch of its life with Shark for a few years.

This isn't the first time I have Sharked X-Plane, so I can tell you a little bit of the back story. This Shark profile is on a GeForce 8800 running OS X 10.5.8; a lot of the time is OpenGL state sync in the driver (that is, the CPU preparing to send instructions to the card to change how it renders), and some is spent pushing vertex data in a less-than-efficient manner. This number is a lot smaller on 10.6 or an ATI card.

While this call isn't in our library, we still have to ask: can we make it faster? The answer, as it turns out, is yes. When you look at what glDrawElements is doing (by removing the data mining) you'll see most of its time is spent in gldUpdateDispatch. This is the internal call to resynchronize OpenGL state. So it's really us changing OpenGL state that causes most of the time spent in glDrawElements. If we can find a way to change less state, we get a win.
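One common way to change less state, shown here only as a sketch and not as a description of what X-Plane actually does, is to order draw calls so that those needing the same state run back to back. The `(shader, texture)` tuples and the draw list below are hypothetical stand-ins for real OpenGL state.

```python
def count_state_changes(draws):
    """Count how often the required state differs from the previous draw's state."""
    changes = 0
    current = None
    for state in draws:
        if state != current:
            changes += 1
            current = state
    return changes


# Hypothetical draw list: each entry is the (shader, texture) a draw call needs.
draws = [
    ("lit", "grass"), ("unlit", "sky"), ("lit", "grass"),
    ("lit", "rock"), ("unlit", "sky"), ("lit", "rock"),
]

print(count_state_changes(draws))          # 6
print(count_state_changes(sorted(draws)))  # 3
```

Reordering like this is only safe where draw order doesn't affect the result, which generally rules out blended geometry.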

Our quad-tree comes up next with 12.9%. That's still a big number for optimization. If we could make our quad tree faster, we might notice.

Once again, I have looked at this issue before; in the case of the quad tree the problem is L2 cache misses - that is, the quad tree is slow because the CPU keeps waiting to get pieces of the quad tree from main memory. If we could change the allocation pattern or node structure of the quad tree to have better locality, we might get a win.

(How do we know it's an L2 cache issue? Shark will let you profile by L2 cache misses instead of time. If a hot spot comes up with both L2 misses and time, it indicates that L2 misses might be the problem. If a hot spot comes up with time but not L2 misses, it means you're not missing cache; something else is making you slow.)

Third on the list is a real surprise - this routine tends the 3-d meshes and sees if anything needs processing. To be blunt, this stat is a surprise and almost certainly a bug, and I did a double-take when I saw it. More investigation is needed; while 7.6% isn't the biggest performance item, this is an operation that shouldn't even be on the list, so there might be a case where fixing one stupid bug gets us a nice win.

plot_group_layers is doing the main per-state-change drawing - it's not a huge win to optimize because the leverage isn't very high and the algorithm is already pretty optimal - that is, real work is being done here.

Mipmap generation is done by the driver, but if we can use some textures without auto-mipmap generation, it might be worth it.

glMapBufferARB is an example of why we need to use "all thread states" - this routine can block, and when it does, we want to see why our fps is low - because our rendering thread is getting nothing done.

glBegin is down at 2% (leverage ratio 0.02), and this is a good example of cost-benefit trade-offs. X-Plane still has some old legacy glBegin/glEnd drawing code. That code is old and nasty and could certainly be made faster with modern batched drawing calls. But look at the leverage: 0.02. That is, if we were able to improve every single case of glBegin by a huge factor (imagine we made it 99% faster!!) we'd still see only a 2% frame-rate increase.

Now 2% is better than not having 2%, but it's the quantity of code that's the issue. We'd have to fix every glBegin to get that win, and the code might not even be that much faster. Because the code is so spread out and the leverage is low, we let it slide. (Over the long term glBegin code will be replaced, but we're not going to stop working on real features to fix this now.)
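To put the hot spots side by side, here is a rough sketch that turns a leverage ratio into a best-case frame-rate gain. The leverage ratios are the ones quoted from the profile; the `frame_rate_gain` helper and the assumption that frame rate is simply the reciprocal of main-thread time are mine.

```python
def frame_rate_gain(leverage, local_saving):
    """Relative frame-rate increase when local_saving of a hot spot's time is removed.

    The old frame time is normalized to 1, so the new frame time is
    1 - leverage * local_saving, and frame rate is its reciprocal.
    """
    new_time = 1.0 - leverage * local_saving
    return 1.0 / new_time - 1.0


# Leverage ratios quoted from the profile above.
hot_spots = {"glDrawElements": 0.356, "quad tree": 0.129, "glBegin": 0.02}

for name, leverage in hot_spots.items():
    # Best case: pretend 99% of the hot spot's cost disappears.
    print(f"{name:15s} {frame_rate_gain(leverage, 0.99):6.1%}")
```

Even in the best case, glBegin lands at roughly 2%, while the same effort aimed at glDrawElements has a far higher ceiling.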

In fact, if you look at the two profiles together, the low-level leverage and strategic view start to make sense. Most of that glDrawElements call is due to OBJs drawing, so it's no surprise that the airplane shows up because it has OBJs in it.

Repeatability

So the value of an optimization is only as good as its leverage, right? Well, not quite. What if one of my shaders can be optimized by 50%, but it's one of ten shaders? Well, that optimization could be worth the full 50% if I repeat the optimization on the other shaders.

In other words, if you can keep applying a trick over and over, you can start to build up real improvement even when the leverage is low. Applying an optimization to multiple code sites is a trivial example; more typically this would be a process. If I can spend a few hours and get a 1% improvement in shader code, that's not huge. But if I can do that every day for a week or two, that might turn into a 10% win.
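A quick sketch of how repeated small wins add up, assuming each round removes the same fraction of whatever time is left (the `compounded_saving` name is made up for the example):

```python
def compounded_saving(per_round_saving, rounds):
    """Total fraction of time removed after repeating a small saving several times.

    Each round removes per_round_saving of the time that is still left,
    so the total is slightly less than simply adding the rounds together.
    """
    remaining = (1.0 - per_round_saving) ** rounds
    return 1.0 - remaining


for rounds in (1, 5, 10):
    print(f"{rounds:2d} rounds of 1%: {compounded_saving(0.01, rounds):.1%}")
```

Under that assumption, about ten rounds of 1% get you close to a 10% win.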

So to answer the question "is 1% a lot", the answer is: yes if the leverage is there and you're going to keep beating on the code over and over.